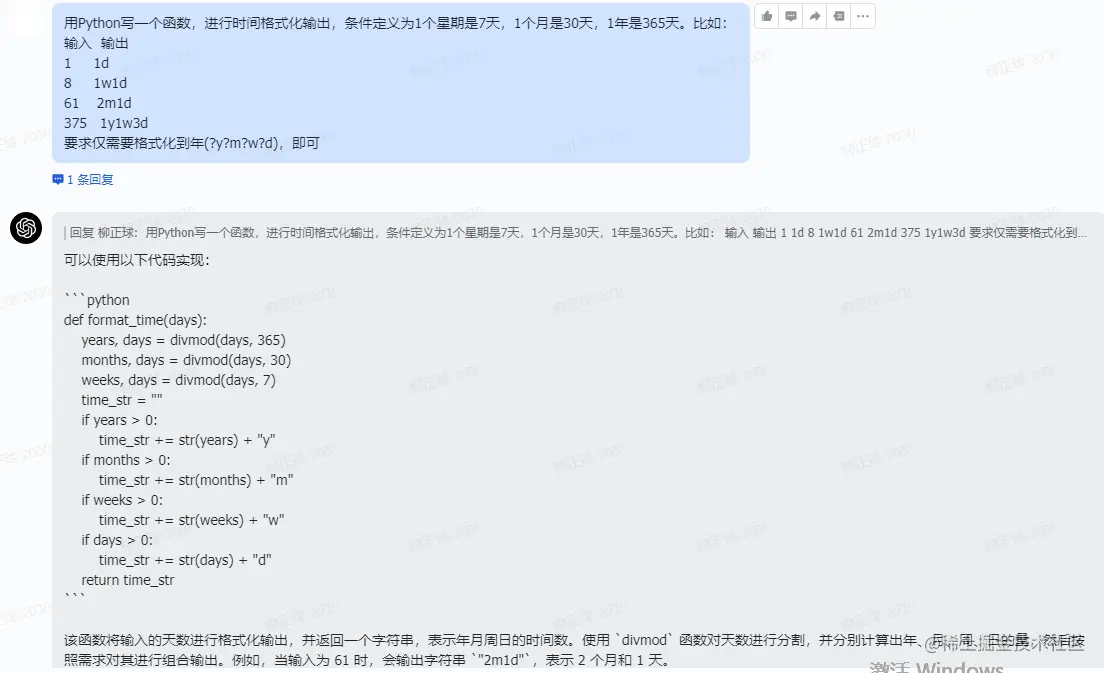

1. Test Cases Generated Directly by ChatGPT

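To write tests, we first need a program. We want a problem that is unlikely to be in the AI's training data, so that the model cannot simply recall the answer.

We use a small, fun problem: a Python function that takes an integer number of days and formats it as a short, human-readable duration. The rules are: 1 week is 7 days, 1 month is 30 days, and 1 year is 365 days. For example, input 1 returns 1d, 8 returns 1w1d, 32 returns 1m2d, and 375 returns 1y1w3d.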
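Put precisely, the rule is a greedy decomposition: whole years first, then whole months from what is left, then whole weeks, with the remainder as days. Components equal to zero are left out (8 gives 1w1d, not 0y0m1w1d). For a non-negative integer number of days $d$:

$$
\begin{aligned}
y &= \left\lfloor d/365 \right\rfloor, & r_1 &= d - 365y,\\
m &= \left\lfloor r_1/30 \right\rfloor, & r_2 &= r_1 - 30m,\\
w &= \left\lfloor r_2/7 \right\rfloor, & r &= r_2 - 7w.
\end{aligned}
$$

For example, $375 = 1\cdot 365 + 10$ and $10 = 1\cdot 7 + 3$, which gives 1y1w3d; $61 = 2\cdot 30 + 1$ gives 2m1d.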

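Requirement:

```text
Write a Python function that formats a duration, where 1 week is 7 days, 1 month is 30 days, and 1 year is 365 days. For example:

Input   Output
1       1d
8       1w1d
61      2m1d
375     1y1w3d

It only needs to format up to years (?y?m?w?d).
```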

We simply ask ChatGPT to write the program:

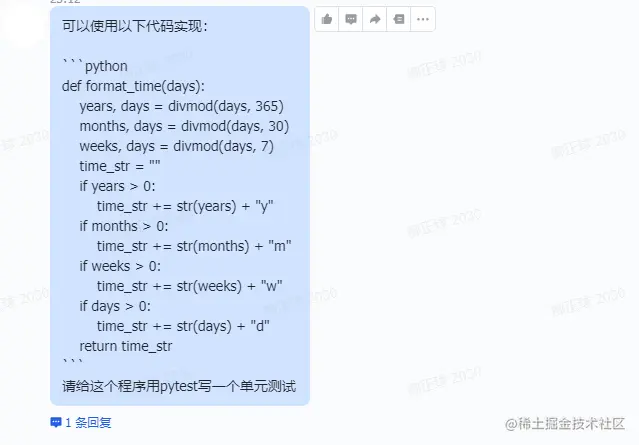

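Since ChatGPT can write code, it can naturally write the unit tests for us as well.

The scenarios these test cases cover are already fairly comprehensive: they include basic functional tests as well as some tests for abnormal inputs.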

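2. Generating Tests Step by Step with the OpenAI API

2.1 Breaking the Task into Prompt Steps

OpenAI's example offers a good approach: split the problem into several steps.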

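- Give the code to the large language model and ask it to explain what the code does.

- Give the code together with its explanation to the model and ask it to plan which test cases should be written for this logic. If there are too few, ask the AI to generate more.

- Submit the detailed test-case descriptions to the model and have it generate concrete test code from them. Then run a syntax check on the generated code; if it does not even pass the syntax check, ask the AI to generate it again.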

2.2 Asking the AI to Explain the Code Under Test

````python
import openai

# Uses the pre-1.0 openai SDK (openai.Completion); the text-davinci-002/003
# models used in this article have since been retired by OpenAI.
def gpt35(prompt, model="text-davinci-002", temperature=0.4, max_tokens=1000,
          top_p=1, stop=["\n\n", "\n\t\n", "\n    \n"]):
    # Stop at the first blank line so the model only writes the explanation
    response = openai.Completion.create(
        model=model,
        prompt=prompt,
        temperature=temperature,
        max_tokens=max_tokens,
        top_p=top_p,
        stop=stop
    )
    message = response["choices"][0]["text"]
    return message

# The function under test, passed to the model as text
code = """
def format_time(days):
    years, days = divmod(days, 365)
    months, days = divmod(days, 30)
    weeks, days = divmod(days, 7)
    time_str = ""
    if years > 0:
        time_str += str(years) + "y"
    if months > 0:
        time_str += str(months) + "m"
    if weeks > 0:
        time_str += str(weeks) + "w"
    if days > 0:
        time_str += str(days) + "d"
    return time_str
"""

def explain_code(function_to_test, unit_test_package="pytest"):
    prompt = f"""# How to write great unit tests with {unit_test_package}

In this advanced tutorial for experts, we'll use Python 3.8 and `{unit_test_package}` to write a suite of unit tests to verify the behavior of the following function.
```python
{function_to_test}
```

Before writing any unit tests, let's review what each element of the function is doing exactly and what the author's intentions may have been.
- First,"""
    response = gpt35(prompt)
    return response, prompt

code_explaination, prompt_to_explain_code = explain_code(code)
print(code_explaination)
````

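First, we define a gpt35 function that:

- uses the text-davinci-002 model, a text-generation model fine-tuned with supervised learning, with the aim of getting a focused explanation of the code;

- sets stop specially: generation ends as soon as two consecutive newlines appear (or a near-equivalent, such as two newlines separated by a tab or spaces), which keeps the model from going on to write the test code in the same reply.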
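The effect of `stop` can be simulated locally. The Completions API ends generation at the first stop sequence, and the returned text does not contain that sequence. The sketch below only truncates a string after the fact (the server stops during generation); `apply_stop` and the raw continuation text are hypothetical, made up for illustration.

```python
from typing import Sequence

# Two newlines, optionally separated by a tab or four spaces.
STOP = ["\n\n", "\n\t\n", "\n    \n"]


def apply_stop(text: str, stop: Sequence[str]) -> str:
    """Truncate text at the earliest stop sequence (the sequence itself is dropped)."""
    cut = len(text)
    for seq in stop:
        idx = text.find(seq)
        if idx != -1:
            cut = min(cut, idx)
    return text[:cut]


# Hypothetical raw continuation: bullet points, a blank line, then test code.
raw = (
    " the function takes an integer number of days.\n"
    "- Next, divmod splits it into years, months, weeks and days.\n"
    "\n"
    "import pytest\n"
)
print(apply_stop(raw, STOP))  # only the two explanation lines survive
```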

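Then, with a carefully designed prompt, we have the GPT model explain the code:

- specify pytest as the test package;

- give the code under test to the GPT model;

- ask the AI to describe precisely what the code does;

- finally, end the prompt with "- First," to lead the GPT model into describing the code step by step, one bullet per line.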

Output:

```text
 the function takes an integer value representing days as its sole argument.
- Next, the `divmod` function is used to calculate the number of years and days, the number of months and days, and the number of weeks and days.
- Finally, a string is built up and returned that contains the number of years, months, weeks, and days.
```

2.3 Having the AI Draft a Test Plan from the Explanation

```python
def generate_a_test_plan(full_code_explaination, unit_test_package="pytest"):
    prompt_to_explain_a_plan = f"""

A good unit test suite should aim to:
- Test the function's behavior for a wide range of possible inputs
- Test edge cases that the author may not have foreseen
- Take advantage of the features of `{unit_test_package}` to make the tests easy to write and maintain
- Be easy to read and understand, with clean code and descriptive names
- Be deterministic, so that the tests always pass or fail in the same way

`{unit_test_package}` has many convenient features that make it easy to write and maintain unit tests. We'll use them to write unit tests for the function above.

For this particular function, we'll want our unit tests to handle the following diverse scenarios (and under each scenario, we include a few examples as sub-bullets):
-"""
    # Continue the previous prompt and answer with the test-plan request
    prompt = full_code_explaination + prompt_to_explain_a_plan
    response = gpt35(prompt)
    return response, prompt

test_plan, prompt_to_get_test_plan = generate_a_test_plan(prompt_to_explain_code + code_explaination)
print(test_plan)
```

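For the test plan, the prompt sets several requirements for the AI:

- The test cases should cover a wide range of inputs.

- They should include edge cases the author may not have foreseen.

- They should make full use of pytest's features.

- They should be concise and easy to understand.

- They should be deterministic: each test always passes or always fails.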

Output:

```text
 Normal inputs:
    - `days` is a positive integer
    - `days` is 0
- Edge cases:
    - `days` is a negative integer
    - `days` is a float
    - `days` is a string
- Invalid inputs:
    - `days` is `None`
    - `days` is a list
```

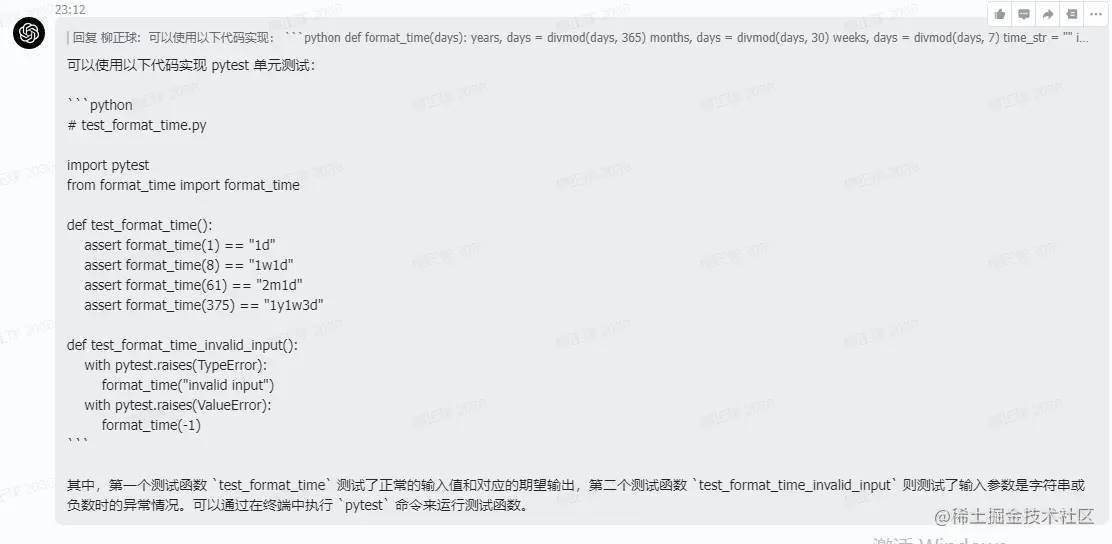
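Before turning the plan into tests, it helps to see what the function actually does for each scenario, because the function performs no input validation. A small sketch: `describe` is a hypothetical helper that returns the result's repr, or the exception name if the call raises, and `format_time` is copied from section 2.2 so the snippet runs on its own.

```python
def format_time(days):
    # The function under test, copied from section 2.2.
    years, days = divmod(days, 365)
    months, days = divmod(days, 30)
    weeks, days = divmod(days, 7)
    time_str = ""
    if years > 0:
        time_str += str(years) + "y"
    if months > 0:
        time_str += str(months) + "m"
    if weeks > 0:
        time_str += str(weeks) + "w"
    if days > 0:
        time_str += str(days) + "d"
    return time_str


def describe(value):
    """Return repr(format_time(value)), or the exception name if it raises."""
    try:
        return repr(format_time(value))
    except Exception as exc:
        return type(exc).__name__


# One input per scenario in the test plan.
for value in [8, 0, -1, 1.5, "1", None, [1]]:
    print(f"{value!r:>6} -> {describe(value)}")
```

With this version of the function, 0 gives an empty string, -1 gives '12m4d' (Python's divmod floors toward negative infinity, so `divmod(-1, 365)` is `(-1, 364)`), 1.5 gives '1.5d', and the string, None and list inputs raise TypeError. What the "expected" result for these edge cases should be is therefore a decision for the test author, not something the model can infer from the code.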

2.4 Generating Test Code from the Test Plan

````python
def generate_test_cases(function_to_test, unit_test_package="pytest"):
    starter_comment = "Below, each test case is represented by a tuple passed to the @pytest.mark.parametrize decorator"
    prompt_to_generate_the_unit_test = f"""

Before going into the individual tests, let's first look at the complete suite of unit tests as a cohesive whole. We've added helpful comments to explain what each line does.
```python
import {unit_test_package} # used for our unit tests

{function_to_test}

#{starter_comment}"""
    # Reuse the whole conversation so far: explanation prompt + explanation,
    # test-plan prompt + plan, then the request for test code
    full_unit_test_prompt = prompt_to_get_test_plan + test_plan + prompt_to_generate_the_unit_test
    # Stop at the closing code fence so that only test code comes back
    return gpt35(model="text-davinci-003", prompt=full_unit_test_prompt, stop="```"), prompt_to_generate_the_unit_test

unit_test_response, prompt_to_generate_the_unit_test = generate_test_cases(code)
print(unit_test_response)
````

Output:

```python
@pytest.mark.parametrize("days, expected", [
    (1, "1d"), # normal input
    (7, "1w"), # normal input
    (30, "1m"), # normal input
    (365, "1y"), # normal input
    (731, "2y"), # normal input
    (-1, pytest.raises(ValueError)), # abnormal input
    (0, pytest.raises(ValueError)), # abnormal input
    (1.5, pytest.raises(TypeError)), # abnormal input
    ("1", pytest.raises(TypeError)), # abnormal input
])
def test_format_time(days, expected):
    """
    Test the format_time() function.
    """
    if isinstance(expected, type):
        # check that the expected result is a type, i.e. an exception
        with pytest.raises(expected):
            # if so, check that the function raises the expected exception
            format_time(days)
    else:
        # otherwise, check that the function returns the expected value
        assert format_time(days) == expected
```

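2.5 Checking Syntax with the ast Module

Finally, it is best to check the syntax of the generated test code, which we can do with Python's ast module. The check must cover not only the generated tests but also the code section of the prompt (the import line, the original function and the starter comment): the model's reply continues directly from that comment line, so the reply on its own is not valid Python.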

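````python
import ast

# The model's reply continues the code that the prompt started (the import line,
# the function under test and the starter comment), so glue that part back on
code_start_index = prompt_to_generate_the_unit_test.find("```python\n") + len("```python\n")
code_output = prompt_to_generate_the_unit_test[code_start_index:] + unit_test_response

try:
    ast.parse(code_output)
except SyntaxError as e:
    print(f"Syntax error in generated code: {e}")

print(code_output)
````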

Output:

```python
import pytest # used for our unit tests

def format_time(days):
    years, days = divmod(days, 365)
    months, days = divmod(days, 30)
    weeks, days = divmod(days, 7)
    time_str = ""
    if years > 0:
        time_str += str(years) + "y"
    if months > 0:
        time_str += str(months) + "m"
    if weeks > 0:
        time_str += str(weeks) + "w"
    if days > 0:
        time_str += str(days) + "d"
    return time_str

#Below, each test case is represented by a tuple passed to the @pytest.mark.parametrize decorator.
#The first element of the tuple is the name of the test case, and the second element is a list of arguments to pass to the function.
#The @pytest.mark.parametrize decorator allows us to write a single test function that can be used to test multiple input values.
@pytest.mark.parametrize("test_input,expected", [
    ("Valid Inputs", [
        (0, "0d"), # test for 0 days
        (1, "1d"), # test for 1 day
        (7, "7d"), # test for 7 days
        (30, "1m"), # test for 30 days
        (365, "1y"), # test for 365 days
        (400, "1y35d"), # test for 400 days
        (800, "2y160d"), # test for 800 days
        (3650, "10y"), # test for 3650 days
        (3651, "10y1d"), # test for 3651 days
    ]),
    ("Invalid Inputs", [
        ("string", None), # test for string input
        ([], None), # test for list input
        ((), None), # test for tuple input
        ({}, None), # test for set input
        ({1: 1}, None), # test for dictionary input
        (1.5, None), # test for float input
        (None, None), # test for None input
    ]),
    ("Edge Cases", [
        (10000000000, "274247y5m2w6d"), # test for large positive integer
        (1, "1d"), # test for small positive integer
        (-10000000000, "-274247y5m2w6d"), # test for large negative integer
        (-1, "-1d") # test for small negative integer
    ])
])
def test_format_time(test_input, expected):
    # This test function uses the @pytest.mark.parametrize decorator to loop through each test case.
    # The test_input parameter contains the name of the test case, and the expected parameter contains a list of arguments to pass to the function.
    # The test_input parameter is not used in the test, but is included for readability.
    for days, expected_result in expected:
        # For each argument in the expected parameter, we call the format_time() function and compare the result to the expected result.
        assert format_time(days) == expected_result
```

As the generated tests show, some of the test cases still do not match the expected results, for example:

```text
@pytest.mark.parametrize("test_input,expected", [
    ("Valid Inputs", [
        (7, "7d" -> "1w"), # test for 7 days
        (30, "1m"), # test for 30 days
        (365, "1y"), # test for 365 days
        (400, "1y35d" -> "1y1m5d"), # test for 400 days
        (800, "2y160d" -> "2y2m1w3d"), # test for 800 days
        (3650, "10y"), # test for 3650 days
        (3651, "10y1d"), # test for 3651 days
    ]),
```
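For comparison, here is a sketch of a hand-checked suite. Every expected value was computed from 1y = 365d, 1m = 30d, 1w = 7d; for instance 800 = 2 × 365 + 2 × 30 + 7 + 3, and 731 = 2 × 365 + 1, so the "2y" expected by the first generated suite is also wrong. Exceptions are tested with `pytest.raises` as a context manager: the first generated suite instead passed `pytest.raises(...)` objects as expected values, which are not exception classes, so its `isinstance(expected, type)` branch never runs. The sketch assumes the current behaviour of `format_time` is the intended one (for example, that 0 gives an empty string); the test names are illustrative.

```python
import pytest


def format_time(days):
    # The article's function, copied so this test file runs on its own.
    years, days = divmod(days, 365)
    months, days = divmod(days, 30)
    weeks, days = divmod(days, 7)
    time_str = ""
    if years > 0:
        time_str += str(years) + "y"
    if months > 0:
        time_str += str(months) + "m"
    if weeks > 0:
        time_str += str(weeks) + "w"
    if days > 0:
        time_str += str(days) + "d"
    return time_str


# Expected values worked out by hand from 1y = 365d, 1m = 30d, 1w = 7d.
@pytest.mark.parametrize("days, expected", [
    (0, ""),            # current behaviour: nothing to print
    (1, "1d"),
    (7, "1w"),
    (8, "1w1d"),
    (30, "1m"),
    (61, "2m1d"),
    (365, "1y"),
    (375, "1y1w3d"),
    (400, "1y1m5d"),    # 365 + 30 + 5
    (731, "2y1d"),      # 2 * 365 + 1
    (800, "2y2m1w3d"),  # 2 * 365 + 2 * 30 + 7 + 3
    (3651, "10y1d"),
])
def test_format_time_valid(days, expected):
    assert format_time(days) == expected


# Exceptions are checked inside a pytest.raises block, not passed as data.
@pytest.mark.parametrize("bad_input", ["1", None, [1]])
def test_format_time_rejects_non_numbers(bad_input):
    with pytest.raises(TypeError):
        format_time(bad_input)
```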

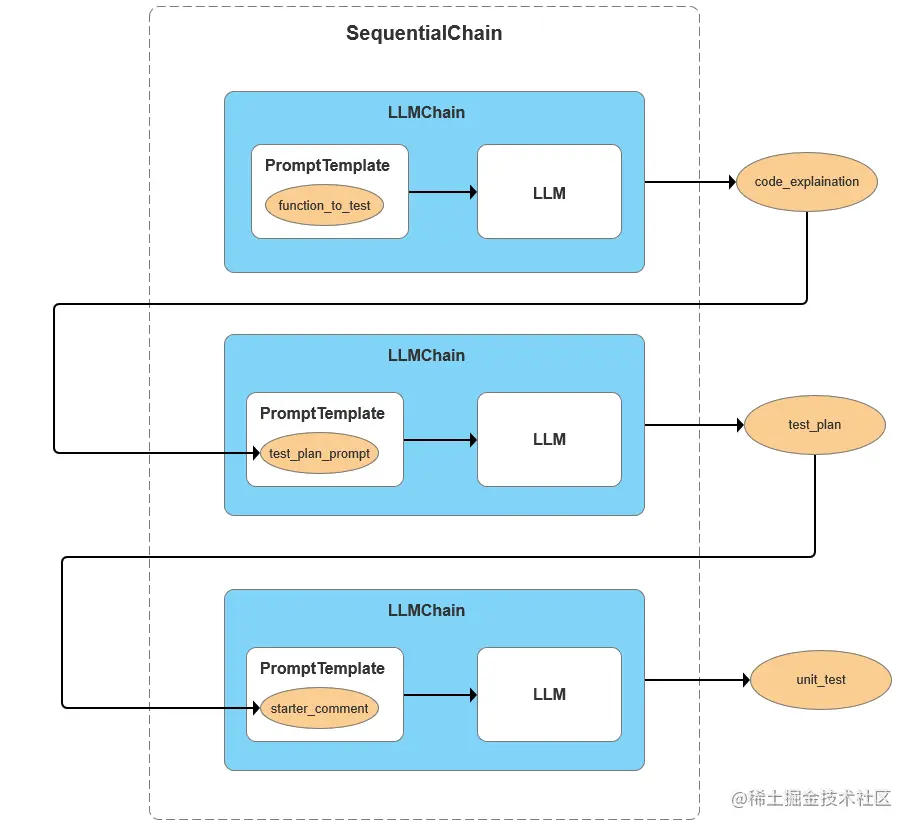

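3. Further Encapsulation with LangChain

OpenAI's large language models expose just two simple core interfaces, Completion and Embedding, and by combining them sensibly we have accomplished all kinds of complex tasks:

- By including the chat history in the prompt, we can make the AI answer questions correctly in context.

- By indexing and storing embeddings in advance, we can make the AI answer questions based on external knowledge.

- And through multiple rounds of conversation, feeding the AI's answers into new questions, we can have the AI write unit tests for our own code.

llama-index focuses on building indexes for LLM applications. LangChain has similar features, but that is not its main selling point: its first selling point is right in its name, chaining calls together.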
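A toy, pure-Python sketch of the chaining idea: each step fills its prompt template from the variables known so far, calls the model, and stores the answer under an output key that later steps can reference. This illustrates the concept only and is not LangChain's implementation; `run_sequential`, `steps` and `echo_model` are hypothetical names, and the model is a stand-in that calls no API.

```python
from typing import Callable, Dict, List, Tuple

# One step: (prompt template, key under which the model's answer is stored).
Step = Tuple[str, str]


def run_sequential(steps: List[Step], complete: Callable[[str], str],
                   inputs: Dict[str, str]) -> Dict[str, str]:
    """Run steps in order, making each answer available to later templates."""
    variables = dict(inputs)
    for template, output_key in steps:
        prompt = template.format(**variables)  # fill in everything known so far
        variables[output_key] = complete(prompt)
    return variables


steps = [
    ("Explain this code:\n{code}\n", "explanation"),
    ("Code:\n{code}\nExplanation:\n{explanation}\nTest plan:\n", "test_plan"),
    ("Plan:\n{test_plan}\nWrite pytest tests:\n", "unit_test"),
]


def echo_model(prompt: str) -> str:
    # Stand-in model: reports how long the prompt it received was.
    return f"<answer to a {len(prompt)}-char prompt>"


result = run_sequential(steps, echo_model, {"code": "def f(): pass"})
print(result["unit_test"])
```

The output keys here play the same role as `output_key="code_explaination"`, `"test_plan"` and `"unit_test"` in the LangChain code of section 3.1.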

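3.1 Automating Unit Test Writing with LangChain

Above, we used multi-step prompts to write unit tests for code automatically. LangChain can call OpenAI's GPT models with several prompts in sequence, which matches this kind of automation exactly.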

````python
# Early (0.0.x) LangChain import paths; later releases moved these classes
from langchain import PromptTemplate, OpenAI, LLMChain
from langchain.chains import SequentialChain
import ast

def write_unit_test(function_to_test, unit_test_package="pytest"):
    # Step 1: explain the source code
    explain_code = """# How to write great unit tests with {unit_test_package}

In this advanced tutorial for experts, we'll use Python 3.8 and `{unit_test_package}` to write a suite of unit tests to verify the behavior of the following function.
```python
{function_to_test}
```

Before writing any unit tests, let's review what each element of the function is doing exactly and what the author's intentions may have been.
- First,"""
    explain_code_template = PromptTemplate(
        input_variables=["unit_test_package", "function_to_test"],
        template=explain_code
    )
    explain_code_llm = OpenAI(model_name="text-davinci-002", temperature=0.4, max_tokens=1000,
                              top_p=1, stop=["\n\n", "\n\t\n", "\n    \n"])
    explain_code_step = LLMChain(llm=explain_code_llm, prompt=explain_code_template, output_key="code_explaination")

    # Step 2: draft a test plan from the code and its explanation
    test_plan = """

A good unit test suite should aim to:
- Test the function's behavior for a wide range of possible inputs
- Test edge cases that the author may not have foreseen
- Take advantage of the features of `{unit_test_package}` to make the tests easy to write and maintain
- Be easy to read and understand, with clean code and descriptive names
- Be deterministic, so that the tests always pass or fail in the same way

`{unit_test_package}` has many convenient features that make it easy to write and maintain unit tests. We'll use them to write unit tests for the function above.

For this particular function, we'll want our unit tests to handle the following diverse scenarios (and under each scenario, we include a few examples as sub-bullets):
-"""
    test_plan_template = PromptTemplate(
        input_variables=["unit_test_package", "function_to_test", "code_explaination"],
        template=explain_code + "{code_explaination}" + test_plan
    )
    test_plan_llm = OpenAI(model_name="text-davinci-002", temperature=0.4, max_tokens=1000,
                           top_p=1, stop=["\n\n", "\n\t\n", "\n    \n"])
    test_plan_step = LLMChain(llm=test_plan_llm, prompt=test_plan_template, output_key="test_plan")

    # Step 3: write the test code from the plan
    starter_comment = "Below, each test case is represented by a tuple passed to the @pytest.mark.parametrize decorator"
    prompt_to_generate_the_unit_test = """

Before going into the individual tests, let's first look at the complete suite of unit tests as a cohesive whole. We've added helpful comments to explain what each line does.
```python
import {unit_test_package} # used for our unit tests

{function_to_test}

#{starter_comment}"""
    unit_test_template = PromptTemplate(
        input_variables=["unit_test_package", "function_to_test", "code_explaination", "test_plan", "starter_comment"],
        template=explain_code + "{code_explaination}" + test_plan + "{test_plan}" + prompt_to_generate_the_unit_test
    )
    # Stop at the closing code fence so that only test code comes back
    unit_test_llm = OpenAI(model_name="text-davinci-002", temperature=0.4, max_tokens=1000, stop="```")
    unit_test_step = LLMChain(llm=unit_test_llm, prompt=unit_test_template, output_key="unit_test")

    # Run the three steps in order; each step's output_key is available to later steps
    sequential_chain = SequentialChain(chains=[explain_code_step, test_plan_step, unit_test_step],
                                       input_variables=["unit_test_package", "function_to_test", "starter_comment"],
                                       verbose=True)
    answer = sequential_chain.run(unit_test_package=unit_test_package, function_to_test=function_to_test,
                                  starter_comment=starter_comment)
    # The reply continues the starter comment, so put the comment back in front.
    # Note: "import pytest" lives in the prompt, not in the returned text.
    return f"""#{starter_comment}""" + answer

code = """
def format_time(days):
    years, days = divmod(days, 365)
    months, days = divmod(days, 30)
    weeks, days = divmod(days, 7)
    time_str = ""
    if years > 0:
        time_str += str(years) + "y"
    if months > 0:
        time_str += str(months) + "m"
    if weeks > 0:
        time_str += str(weeks) + "w"
    if days > 0:
        time_str += str(days) + "d"
    return time_str
"""

def write_unit_test_automatically(code, retry=3):
    unit_test_code = write_unit_test(code)
    all_code = code + unit_test_code
    tried = 0
    while tried < retry:
        try:
            # Syntax check only; the tests are not executed here
            ast.parse(all_code)
            return all_code
        except SyntaxError as e:
            print(f"Syntax error in generated code: {e}")
            all_code = code + write_unit_test(code)
            tried += 1
    return None  # no attempt produced valid syntax within the retry budget

print(write_unit_test_automatically(code))
````

Output:

```python
def format_time(days):
    years, days = divmod(days, 365)
    months, days = divmod(days, 30)
    weeks, days = divmod(days, 7)
    time_str = ""
    if years > 0:
        time_str += str(years) + "y"
    if months > 0:
        time_str += str(months) + "m"
    if weeks > 0:
        time_str += str(weeks) + "w"
    if days > 0:
        time_str += str(days) + "d"
    return time_str

#Below, each test case is represented by a tuple passed to the @pytest.mark.parametrize decorator.
#The first element of the tuple is the name of the test case, and the second element is a list of tuples.
#Each tuple in the list of tuples represents an individual test.
#The first element of each tuple is the input to the function (days), and the second element is the expected output of the function.
@pytest.mark.parametrize('test_case_name, test_cases', [
    # Test cases for when the days argument is a positive integer
    ('positive_int', [
        (1, '1d'),
        (10, '10d'),
        (100, '1y3m2w1d')
    ]),
    # Test cases for when the days argument is 0
    ('zero', [
        (0, '')
    ]),
    # Test cases for when the days argument is negative
    ('negative_int', [
        (-1, '-1d'),
        (-10, '-10d'),
        (-100, '-1y-3m-2w-1d')
    ]),
    # Test cases for when the days argument is not an integer
    ('non_int', [
        (1.5, pytest.raises(TypeError)),
        ('1', pytest.raises(TypeError))
    ])
])
def test_format_time(days, expected_output):
    # This test function is called once for each test case.
    # days is set to the input for the function, and expected_output is set to the expected output of the function.
    # We can use the pytest.raises context manager to test for exceptions.
    if isinstance(expected_output, type) and issubclass(expected_output, Exception):
        with pytest.raises(expected_output):
            format_time(days)
    else:
        assert format_time(days) == expected_output
```

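4. Summary

Completing a complex task with a large language model often takes several questions to the AI, where the answers to earlier questions become part of the input to later ones. By combining multiple LLMChains into a SequentialChain and running them in order, LangChain greatly simplifies developing this kind of task.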
